Accelerating AI with Real-Time Data: The Confluent–Databricks Partnership and Tableflow Innovation

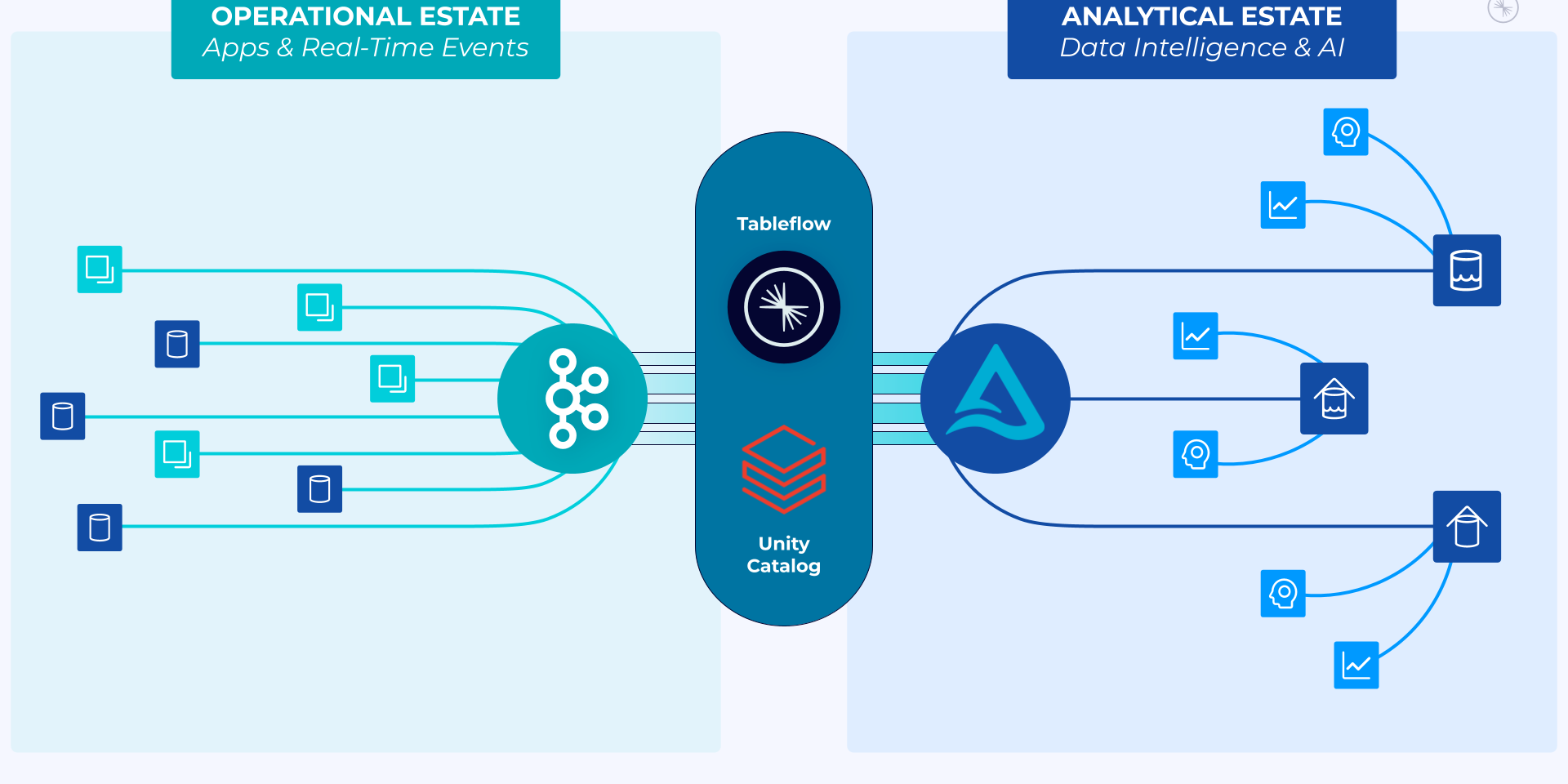

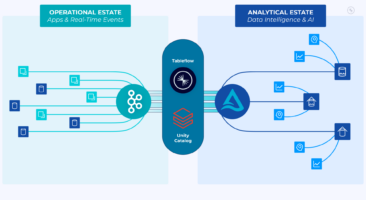

In today’s competitive landscape, speed is everything—especially when it comes to deploying AI solutions. Enterprises are increasingly relying on AI powered by real-time data to drive smarter, faster decisions. However, data often remains locked in silos between operational systems (that power day-to-day activities) and analytical systems (where insights are derived). The expanded partnership between Confluent and Databricks is set to transform this paradigm, dramatically simplifying the integration between these environments.

Bridging the Gap Between Operational and Analytical Data

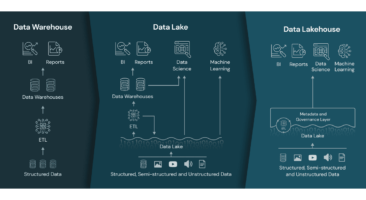

Traditional data workflows require manual, batch-based processes to move data between systems. This not only slows down time-to-action but also risks losing data governance and lineage along the way. As described in the Confluent blog, many enterprises struggle because their AI models are trained on stale data, while real-time applications suffer from delayed insights.

Key Pain Points Addressed:

- Data Silos: Operational data powering applications and analytical data driving decision-making exist in separate silos.

- Manual Processes: Batch jobs that transfer data are slow, brittle, and often result in lost governance and data context.

- AI Inefficiencies: Outdated or fragmented data severely undermines the performance of modern AI models—especially for large language models (LLMs) and agentic AI.

This fragmented ecosystem has long been a barrier to achieving real-time, automated AI decision-making. Enterprises need an integrated approach that ensures the data powering AI is both fresh and governed throughout its lifecycle.

The Confluent–Databricks Integration: A Game-Changer for AI

To address these challenges, Confluent and Databricks have developed a bidirectional, Delta Lake-first integration that unifies the operational and analytical worlds. At the heart of this innovation is Tableflow, a Confluent Cloud feature that materializes Kafka topics as Delta Lake tables.
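Here is a minimal conceptual sketch in plain Python that makes "turning a Kafka log into a table" concrete. It is not Tableflow's implementation or API. The `LogRecord` type and both functions are hypothetical, and an in-memory list stands in for a real topic partition. The sketch shows two common ways to read a log as a table. The append view turns every record into a row. The upsert view keeps only the latest value per key and treats a `None` value as a delete (tombstone).

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class LogRecord:
    """One record in a hypothetical Kafka-like topic partition."""
    offset: int
    key: str
    value: Optional[Dict[str, Any]]  # None models a tombstone (delete)


def materialize_append(log: List[LogRecord]) -> List[Dict[str, Any]]:
    """Append view: every non-tombstone record becomes a row, in offset order."""
    rows: List[Dict[str, Any]] = []
    for rec in sorted(log, key=lambda r: r.offset):
        if rec.value is not None:
            rows.append({"_offset": rec.offset, "_key": rec.key, **rec.value})
    return rows


def materialize_upsert(log: List[LogRecord]) -> Dict[str, Dict[str, Any]]:
    """Upsert view: keep only the latest value per key; tombstones remove the key."""
    table: Dict[str, Dict[str, Any]] = {}
    for rec in sorted(log, key=lambda r: r.offset):
        if rec.value is None:
            table.pop(rec.key, None)
        else:
            table[rec.key] = rec.value
    return table


# Hypothetical sample log: two orders, one updated, one deleted.
log = [
    LogRecord(0, "order-1", {"status": "created", "amount": 40}),
    LogRecord(1, "order-2", {"status": "created", "amount": 15}),
    LogRecord(2, "order-1", {"status": "shipped", "amount": 40}),
    LogRecord(3, "order-2", None),
]
print(len(materialize_append(log)))  # 3
print(materialize_upsert(log))  # {'order-1': {'status': 'shipped', 'amount': 40}}
```

The right view depends on the data. Event streams such as clicks are naturally append-only. Changelog-style topics, such as order status updates, are usually queried for their latest state.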

How It Works:

- Tableflow to Unity Catalog Integration: Tableflow materializes operational data streams from Confluent as Delta Lake tables that are registered in Databricks Unity Catalog, which enforces governance. This lets real-time data flow directly into analytics and AI workloads.

- Bidirectional Data Flow: Not only does data flow from operational systems into analytical environments, but AI-generated insights can also be pushed back into operational systems. This enables automated, real-time decision-making—eliminating the delays of manual intervention.

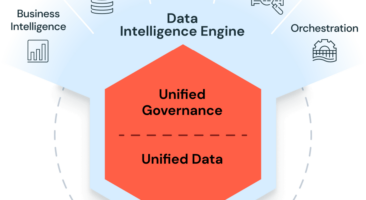

- Unified Governance: Integrating Confluent’s Stream Governance with Databricks’ Unity Catalog ensures that every data asset remains secure, traceable, and compliant across its journey. This unified approach builds a trusted source of truth for both data scientists and application developers.

As highlighted in the Confluent blog, this integration is designed to provide enterprises with “AI-ready, real-time data products” that are governed, reusable, and tailored for intelligent applications.
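The bidirectional loop described above can be sketched the same way. In this hypothetical example, `analytical_job` stands in for an analytics or AI step run against a materialized table, and `publish_back` stands in for writing results to an operational topic that downstream services consume. In a real deployment, the read side would query the Tableflow-materialized table and the write side would use a Kafka producer. Here both are replaced by in-memory lists. The topic layout, spend threshold, and action name are assumptions for illustration only.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical operational topic modeled as an in-memory list of (key, value) events.
Topic = List[Tuple[str, dict]]


def analytical_job(orders_table: List[dict], spend_threshold: float) -> Dict[str, float]:
    """Stand-in for an analytics/AI step: total spend per customer,
    keeping only customers above a hypothetical threshold."""
    totals: Dict[str, float] = defaultdict(float)
    for row in orders_table:
        totals[row["customer_id"]] += row["amount"]
    return {c: t for c, t in totals.items() if t > spend_threshold}


def publish_back(insights: Dict[str, float], actions_topic: Topic) -> None:
    """Reverse path: write each insight as a keyed event that an operational
    service could consume (for example, to trigger an offer)."""
    for customer_id, total in sorted(insights.items()):
        actions_topic.append((customer_id, {"action": "offer_upgrade", "total_spend": total}))


# Hypothetical rows, as if read from a materialized orders table.
orders_table = [
    {"customer_id": "c1", "amount": 120.0},
    {"customer_id": "c2", "amount": 30.0},
    {"customer_id": "c1", "amount": 90.0},
]
actions_topic: Topic = []
publish_back(analytical_job(orders_table, spend_threshold=100.0), actions_topic)
print(actions_topic)  # [('c1', {'action': 'offer_upgrade', 'total_spend': 210.0})]
```

Keying the outbound events by customer ID means a downstream consumer can process each customer's actions in order. Kafka preserves ordering per partition, and records with the same key go to the same partition.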

Tableflow: Enabling a Seamless Data Ecosystem

Tableflow takes integration one step further by leveraging the power of open table formats—starting with Delta Lake. This approach offers several significant advantages:

- Simplicity and Flexibility: By materializing Kafka logs as structured tables, Tableflow simplifies operations. Developers and data practitioners can work with unified schemas and namespaces without managing complex ETL pipelines.

- Enhanced AI Readiness: With data being continuously updated in Delta Lake, AI models can train on fresh, accurate, and contextualized information. This continuous feedback loop helps improve model accuracy and responsiveness.

- Optimized Operational Efficiency: With Tableflow, data no longer has to pass through cumbersome batch jobs. Instead, operational and analytical systems work off the same live data, which reduces manual overhead and enables real-time insights.

- Future-Proofing Data Strategies: As enterprises increasingly rely on LLMs and agentic AI, a streamlined process that unifies data across environments is becoming essential. Because Tableflow writes to open table formats (Delta Lake and Apache Iceberg), organizations can adopt it without overhauling existing systems.

Unlocking the Power of AI with Trusted Data

By ensuring that AI models operate on real-time, trusted data, this integration paves the way for transformative capabilities:

- Faster Decision-Making: Continuous, live data feeds can shrink time-to-action from hours or days to near real time.

- Improved Model Accuracy: Fresh operational data allows AI models to be retrained and refreshed with the latest information, which can lead to more accurate predictions and automated decisions.

- Seamless Automation: AI-generated insights can be automatically fed back into operational systems, enabling businesses to shift from reactive to proactive decision-making.

- Enterprise-Wide Collaboration: With a single, unified data source, cross-functional teams—from developers to data analysts—can collaborate more efficiently, accelerating innovation and value creation.

Looking Ahead: The Future of Real-Time AI Integration

The Confluent–Databricks partnership is only the beginning. Future enhancements are set to further deepen the integration by:

- Rolling Out Additional Integrations: Upcoming phases will introduce even tighter coupling between Tableflow and Unity Catalog, further streamlining data flows.

- Expanding Ecosystem Support: Additional open table formats and compute engines will be integrated, offering even greater flexibility and performance.

- Enhanced Governance and Compliance: With continuous improvements in metadata management and data lineage tracking, enterprises will benefit from even more robust governance frameworks.

As we look ahead, the fusion of operational and analytical data streams promises to unlock unprecedented opportunities for AI innovation—enabling businesses to harness the full power of their data assets.

Transform Your Enterprise with LoadSys

At LoadSys, we understand that successful AI deployment starts with having the right data at the right time. Our expertise in Databricks implementations and data architecture optimization can help your organization fully leverage the capabilities of the Confluent–Databricks integration and Tableflow innovation.

Ready to unlock real-time, trusted data for your AI applications?

Contact LoadSys today to schedule a consultation and discover how our tailored solutions can transform your data strategy.

Empower your business to move from reactive insights to proactive, automated decision-making—because in the age of AI, every millisecond counts.
